
Submitted: March 05, 2025 | Approved: March 22, 2025 | Published: March 23, 2025

How to cite this article: Bai L, Bai S. Feature Processing Methods: Recent Advances and Future Trends. J Clin Med Exp Images. 2025; 9(1): 010-014. Available from: https://dx.doi.org/10.29328/journal.jcmei.1001035

DOI: 10.29328/journal.jcmei.1001035

Copyright license: © 2025 Bai L, et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Keywords: Feature processing; Artificial intelligence; Deep learning; Automated feature engineering; Data preprocessing; Feature selection; Dimensionality reduction

Feature Processing Methods: Recent Advances and Future Trends

Lufeng Bai1* and Shiying Bai1,2

1Jiangsu Second Normal College Nanjing, Jiangsu 211200, China

2Jiang Yan Second School, TaiZhou, 225500, China

*Address for Correspondence: Lufeng Bai, Jiangsu Second Normal College Nanjing, Jiangsu 211200, China, Email: [email protected]

This paper reviews recent developments and directions in feature processing. We begin by revisiting conventional feature processing methods, then turn to deep feature extraction techniques, automated feature engineering, and applications of feature processing. The article also analyzes current research challenges and outlines future development directions, offering insights for researchers in related fields.

Feature processing is a vital element of artificial intelligence and machine learning, and it significantly influences the performance and generalization ability of models. Conventional feature processing techniques are insufficient for contemporary AI systems. Recent breakthroughs in deep learning have created new opportunities for feature processing, while automated feature engineering has emerged as a research hotspot. This paper presents the latest advances in AI feature processing methods, analyzes current challenges, and discusses future trends to provide insights for related research.

Data preprocessing

Conventional feature processing methods include data preprocessing, feature selection, and dimensionality reduction. Data preprocessing is the initial phase of feature processing and encompasses tasks such as data cleaning, outlier detection, and data standardization. Data cleaning involves handling noisy data and identifying and deleting erroneous values [1]. Outlier detection identifies anomalous samples to prevent their negative impact on model training. Data standardization uses normalization methods to transform features into a common range, which improves convergence speed during training.
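The outlier detection and standardization steps described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the interquartile-range (IQR) rule, the threshold `k=1.5`, z-score scaling, and the sample values are assumptions chosen for the example and are not prescribed by the article.

```python
import statistics

def iqr_outlier_mask(values, k=1.5):
    """Return True for each value outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]

def standardize(values):
    """Z-score scaling: zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - mean) / std for v in values]

# Hypothetical feature column with one obviously erroneous reading (95.0).
raw = [10.0, 12.0, 11.0, 13.0, 12.0, 11.0, 12.0, 95.0]
mask = iqr_outlier_mask(raw)
cleaned = [v for v, is_outlier in zip(raw, mask) if not is_outlier]
scaled = standardize(cleaned)
```

Removing the outlier before scaling matters here: a single extreme value would otherwise inflate the standard deviation and compress the remaining samples into a narrow range.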

Feature selection

Feature selection chooses the most relevant subset of features from the original set. Common approaches include filter, wrapper, and embedded methods. Filter methods assess feature significance with statistical measures such as the chi-square test and mutual information, independently of any model. Wrapper methods combine the feature selection process with model training and select the feature subset that yields the best model performance. Embedded methods perform feature selection during model training, as in Lasso regression and decision tree algorithms. Each approach has its own advantages and disadvantages [1,2], and in practical applications they need to be selected and combined according to the task.
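As a rough illustration of the filter approach, the sketch below ranks discrete features by their mutual information with the class label. The function names, feature names, and toy data are hypothetical, and the estimator is the plain plug-in estimate from empirical counts, which is only one of several possible choices.

```python
import math
from collections import Counter

def mutual_information(xs, ys):
    """Plug-in estimate of mutual information (in nats) between two discrete sequences."""
    n = len(xs)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), count in pxy.items():
        # p(x, y) * log(p(x, y) / (p(x) * p(y))), written with raw counts
        mi += (count / n) * math.log(count * n / (px[x] * py[y]))
    return mi

def rank_features(columns, labels):
    """Score every feature column against the labels, highest score first."""
    scores = {name: mutual_information(col, labels) for name, col in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

labels = [0, 0, 1, 1, 0, 1, 0, 1]
columns = {
    "informative": [0, 0, 1, 1, 0, 1, 0, 1],  # identical to the label
    "noisy":       [0, 1, 1, 1, 0, 1, 0, 0],  # agrees with the label in 6 of 8 rows
    "irrelevant":  [0, 1, 0, 1, 1, 0, 0, 1],  # statistically independent of the label
}
ranking = rank_features(columns, labels)
```

A filter of this kind never trains a model, which makes it cheap but blind to interactions between features; that is the usual motivation for combining it with wrapper or embedded methods.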

Dimensionality reduction

Dimensionality reduction maps the original features into a lower-dimensional space while retaining as much useful information as possible. Typical linear methods include principal component analysis (PCA) and linear discriminant analysis (LDA). PCA transforms the original features into a set of linearly uncorrelated components ordered by the variance they explain. LDA is a supervised dimensionality reduction method that seeks the optimal projection direction by maximizing inter-class distance and minimizing intra-class distance. In addition, nonlinear dimensionality reduction methods such as t-SNE and UMAP perform well in visualization and high-dimensional data analysis.
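To make the PCA step concrete, the following sketch extracts the first principal component of a small two-dimensional data set. It is a simplified illustration under stated assumptions: power iteration on the sample covariance matrix stands in for a full eigendecomposition, and the data points are invented for the example.

```python
import math

def center(rows):
    """Subtract the per-feature mean from every sample."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]

def covariance(centered_rows):
    """Sample covariance matrix of already-centered data."""
    n, d = len(centered_rows), len(centered_rows[0])
    return [[sum(r[i] * r[j] for r in centered_rows) / (n - 1) for j in range(d)]
            for i in range(d)]

def first_principal_component(cov, iterations=200):
    """Power iteration: converges to the eigenvector with the largest eigenvalue."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

# Hypothetical samples lying close to the line y = x.
data = [[2.0, 1.9], [0.0, 0.1], [-1.0, -1.1], [1.0, 0.9], [-2.0, -1.8]]
centered = center(data)
pc1 = first_principal_component(covariance(centered))
# Project each 2-D sample onto the first component to obtain a 1-D feature.
projected = [sum(a * b for a, b in zip(row, pc1)) for row in centered]
```

Because the two input features are almost perfectly correlated, a single component captures nearly all of the variance, which is the situation in which PCA gives the largest reduction at the smallest loss.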

The growing complexity of data has revealed the inability of these conventional techniques to capture non-linear dependencies. As a result, the focus of research has shifted toward advanced deep learning-based methodologies.

Feature extraction with CNNs

The emergence of deep learning has transformed feature processing. Convolutional neural networks (CNNs) excel in image processing by learning hierarchical feature representations. A CNN extracts local features through convolutional layers, reduces feature dimensions through pooling layers, and integrates global information through fully connected layers, thereby achieving efficient feature extraction from images. In computer vision tasks, pretrained CNN models have become standard tools for feature extraction [3-5].
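The convolution and pooling stages described above can be illustrated without any deep learning framework. The sketch below is a toy example, not a trainable network: the hand-written vertical-edge kernel and the 5 x 6 image are assumptions, whereas a real CNN would learn its kernels from data.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Element-wise rectified linear activation."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; incomplete border windows are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Hypothetical 5 x 6 image: dark left half, bright right half.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-written 3 x 3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]

feature_map = relu(conv2d(image, kernel))  # 3 x 4 map, strongest where the edge is
pooled = max_pool(feature_map)             # 1 x 2 map after 2 x 2 pooling
```

The feature map is non-zero only in the columns whose receptive field straddles the edge, and pooling keeps that response while shrinking the spatial size, which mirrors the local-feature and dimension-reduction roles described in the text.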

Sequence modeling via RNNs

Recurrent neural networks (RNNs) [6-8] demonstrate strong feature extraction abilities when dealing with sequential data. RNNs are designed to capture temporal dependencies in sequence data, while long short-term memory (LSTM) networks address the vanishing-gradient problem that arises when training on long sequences. These models have achieved significant results in fields such as natural language processing.
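How a recurrent network carries temporal information forward can be seen in a bare forward pass. The sketch below implements a plain (Elman-style) recurrent cell with a tanh activation; the weight values are arbitrary assumptions chosen for illustration, and no training or LSTM gating is shown.

```python
import math

def rnn_forward(sequence, w_in, w_rec, bias):
    """Run a simple recurrent cell over a sequence and return every hidden state.

    h_t = tanh(W_in x_t + W_rec h_{t-1} + b), with h_0 = 0.
    """
    hidden = [0.0] * len(bias)
    states = []
    for x in sequence:
        hidden = [
            math.tanh(
                sum(w_in[i][k] * x[k] for k in range(len(x)))
                + sum(w_rec[i][j] * hidden[j] for j in range(len(hidden)))
                + bias[i]
            )
            for i in range(len(bias))
        ]
        states.append(hidden)
    return states

# Hypothetical fixed weights: 1 input feature, 2 hidden units.
w_in = [[0.5], [-0.3]]
w_rec = [[0.8, 0.0], [0.1, 0.5]]
bias = [0.0, 0.0]

# The same input values in a different order give different final features.
states_early = rnn_forward([[1.0], [0.0], [0.0]], w_in, w_rec, bias)
states_late = rnn_forward([[0.0], [0.0], [1.0]], w_in, w_rec, bias)
```

In the first sequence the hidden state stays non-zero after the input has returned to zero, which is the memory effect that lets the final state serve as a feature vector for the whole sequence. Repeated multiplication by the recurrent weights is also where gradients shrink over long sequences, the problem LSTM gating is designed to mitigate.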

Autoencoders & generative models

An autoencoder learns efficient data representations through an encoding and decoding process, and it is used for feature reduction and denoising. Its fundamental structure consists of an encoder and a decoder, which work together to learn lower-dimensional representations of the data by minimizing the reconstruction error. Related generative models such as the variational autoencoder (VAE) and the generative adversarial network (GAN) [9-12] have further improved the expressive power of feature learning.
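The reconstruction objective mentioned above can be written compactly. In the sketch below, the symbols for the encoder $f_\theta$, the decoder $g_\phi$, and the choice of a mean squared error are notational assumptions introduced here for illustration; other reconstruction losses are equally possible.

```latex
z_i = f_\theta(x_i), \qquad \hat{x}_i = g_\phi(z_i), \qquad
\mathcal{L}(\theta, \phi) = \frac{1}{N} \sum_{i=1}^{N}
    \bigl\lVert x_i - g_\phi\bigl(f_\theta(x_i)\bigr) \bigr\rVert_2^2,
\qquad x_i \in \mathbb{R}^{d},\; z_i \in \mathbb{R}^{k},\; k < d
```

The constraint $k < d$ is what forces the code $z_i$ to act as a reduced feature representation rather than a copy of the input.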

These methods can learn complex feature representations directly from raw data, greatly reducing the workload of manual feature design. However, they also face challenges such as poor interpretability and the need for large amounts of training data, which has driven the development of automated feature engineering.

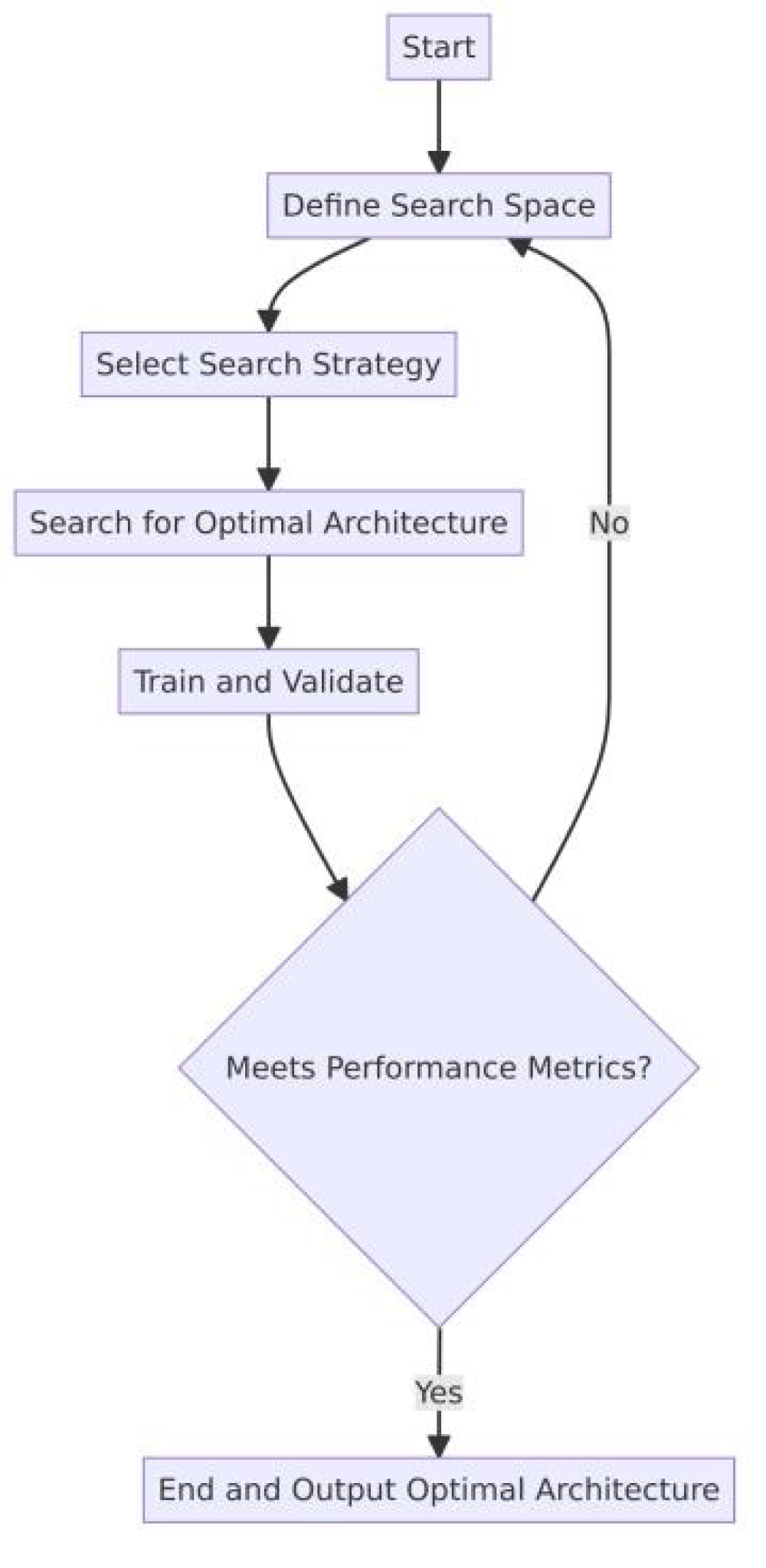

Neural architecture search

Automated feature engineering seeks to minimize manual involvement and enhance the efficiency of feature processing. Neural architecture search (NAS) automates the design of the feature extraction network [13-17]. NAS employs techniques such as reinforcement learning and gradient-based optimization to automatically identify well-performing network architectures. This not only improves model performance but also greatly reduces manual design effort. An overview of NAS is shown in Figure 1.

Figure 1: NAS.
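The basic search loop behind NAS can be sketched with the simplest possible strategy. Note the substitution: the example uses random search rather than the reinforcement learning or gradient-based strategies named in the text, because it fits in a few lines. The search space, the `proxy_score` function, and its constants are hypothetical stand-ins; in practice the score would come from training each candidate and measuring validation performance.

```python
import random

# Hypothetical search space: every key and option is an illustrative assumption.
SEARCH_SPACE = {
    "num_layers": [2, 4, 6],
    "width": [16, 32, 64],
    "kernel_size": [3, 5],
}

def sample_architecture(rng):
    """Draw one candidate by picking an option for every design choice."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def proxy_score(arch):
    """Toy stand-in for 'train the candidate and measure validation accuracy'."""
    capacity = arch["num_layers"] * arch["width"]
    cost_penalty = 0.001 * capacity * arch["kernel_size"]
    return capacity / (capacity + 64) - cost_penalty

def random_search(trials, seed=0):
    """Evaluate `trials` random candidates and return the best one found."""
    rng = random.Random(seed)
    history = []
    for _ in range(trials):
        arch = sample_architecture(rng)
        history.append((proxy_score(arch), arch))
    best_score, best_arch = max(history, key=lambda item: item[0])
    return best_arch, best_score, history

best_arch, best_score, history = random_search(trials=20)
```

More elaborate NAS methods keep the same three ingredients (a search space, a candidate evaluator, and a search strategy) and differ mainly in how the next candidate is proposed.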

Meta-learning for feature adaptation

Meta-learning allows models to adjust swiftly to the feature processing needs of new tasks by learning the learning process itself. By training on multiple related tasks, meta-learning equips models to generalize to unfamiliar tasks. This approach performs well in small-sample learning and cross-domain transfer learning, and it offers new perspectives for feature processing.

RL-based feature selection framework

Feature selection based on reinforcement learning adapts its strategy dynamically through interactions with the environment. It frames the feature selection task as a sequential decision problem, which enables the agent to identify the optimal subset of features. Reinforcement learning methods offer distinct advantages for high-dimensional data and are well suited to dynamic feature selection problems [18-21].
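The idea of learning which features to keep from reward signals can be illustrated with a deliberately reduced setting. The sketch below treats each feature as an arm of an epsilon-greedy multi-armed bandit, which is a simplification of full reinforcement learning (there is no state and no sequential subset construction). The feature names, the `TRUE_GAIN` table, and the noisy reward function are hypothetical; in a real system the reward would be the change in validation performance when a feature is used.

```python
import random

def bandit_feature_selection(features, reward_fn, k, steps=200, epsilon=0.2, seed=0):
    """Estimate each feature's usefulness from rewards and keep the top k."""
    rng = random.Random(seed)
    q = {f: 0.0 for f in features}    # running value estimate per feature
    pulls = {f: 0 for f in features}  # how often each feature was tried
    for step in range(steps):
        if step < len(features):
            choice = features[step]            # try every feature once
        elif rng.random() < epsilon:
            choice = rng.choice(features)      # explore
        else:
            choice = max(features, key=q.get)  # exploit the current best estimate
        reward = reward_fn(choice, rng)
        pulls[choice] += 1
        q[choice] += (reward - q[choice]) / pulls[choice]  # incremental mean
    selected = sorted(features, key=q.get, reverse=True)[:k]
    return selected, q, pulls

# Hypothetical "true" usefulness of each feature, unknown to the agent.
TRUE_GAIN = {"f_a": 0.50, "f_b": 0.40, "f_c": 0.05, "f_d": 0.00}

def noisy_gain(feature, rng):
    """Toy reward: true gain plus bounded evaluation noise."""
    return TRUE_GAIN[feature] + rng.uniform(-0.05, 0.05)

selected, q_values, pulls = bandit_feature_selection(list(TRUE_GAIN), noisy_gain, k=2)
```

The agent spends most of its evaluation budget on the features that currently look best, which is the property that makes reward-driven selection attractive when evaluating every subset is too expensive.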

These approaches are at the cutting edge of current research in feature processing [22,23], enhancing automation while also offering innovative strategies for managing high-dimensional and heterogeneous data [24]. However, how to balance the degree of automation with model interpretability, and how to effectively apply these methods to practical scenarios, remain open problems that need further research [25,26].

The application and challenges of feature processing in specific fields

Feature processing techniques have demonstrated significant promise across various AI application domains. In computer vision, these methods have enhanced the accuracy of image recognition and object detection. For instance, in medical image analysis, feature processing approaches that integrate domain knowledge can effectively identify lesion characteristics, aiding physicians in their diagnostic efforts. In autonomous driving, multi-sensor data fusion is key to achieving environmental perception.

Enhance the depth of algorithm application and cross modal practice

In natural language processing, word embeddings and context-aware feature representations significantly improve the performance of language models. Pre-trained language models learn universal language features from large-scale corpora and perform well in downstream tasks. However, how to handle multilingual text data and achieve effective feature representation in low-resource languages remains a problem to be solved.

In the technical domain, integrating advanced algorithms can significantly enhance the practical relevance of feature processing. For instance, CNNs coupled with attention mechanisms [27] have demonstrated superior performance in identifying micro-cracks on metal surfaces. A comparative study on the NEU-DET dataset revealed a 15% accuracy improvement over conventional methods like Haralick texture analysis. In autonomous driving, multimodal feature fusion techniques address the challenges of heterogeneous sensor data. The PointPainting algorithm [28] effectively aligns LiDAR point clouds with camera images by projecting semantic labels from 2D images onto 3D points, achieving a 12% higher mAP on the nuScenes benchmark. Additionally, cross-domain adaptation of architectures, such as applying Transformer-based models (e.g., ESM-2 [29]) to protein sequence analysis, illustrates how NLP-inspired feature representations can advance bioinformatics tasks like fold recognition. These examples underscore the importance of tailoring deep learning frameworks to domain-specific constraints while leveraging interdisciplinary insights.
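The projection step behind this kind of LiDAR-camera fusion can be sketched as follows. This is a simplified illustration of the idea, not the published implementation: it assumes the points are already expressed in the camera coordinate frame, uses an ideal pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), and attaches a single class label per point instead of a vector of class scores.

```python
def paint_points(points_cam, seg_labels, fx, fy, cx, cy):
    """Attach to each 3-D point the semantic label of the pixel it projects onto.

    points_cam: iterable of (x, y, z) in the camera frame, z pointing forward.
    seg_labels: 2-D grid (rows of pixels) of per-pixel class labels.
    """
    height, width = len(seg_labels), len(seg_labels[0])
    painted = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))  # pixel column
        v = int(round(fy * y / z + cy))  # pixel row
        if 0 <= u < width and 0 <= v < height:
            painted.append((x, y, z, seg_labels[v][u]))
    return painted

# Hypothetical 5 x 5 segmentation output with two labeled pixels.
seg_labels = [["background"] * 5 for _ in range(5)]
seg_labels[2][2] = "car"
seg_labels[2][3] = "road"

points = [
    (0.0, 0.0, 4.0),   # projects to pixel (u=2, v=2)
    (2.0, 0.0, 4.0),   # projects to pixel (u=3, v=2)
    (0.0, 0.0, -1.0),  # behind the camera, dropped
    (10.0, 0.0, 1.0),  # projects outside the image, dropped
]
painted = paint_points(points, seg_labels, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
```

The painted points carry both geometry and image semantics, so a downstream 3D detector can consume them as ordinary point features with extra channels.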

Deepen medical data-driven solutions

Effective feature processing is crucial for gene sequence analysis and protein structure prediction.

Deep learning models have achieved breakthroughs in protein structure prediction [30]. However, the complexity of biological data requires the development of dedicated feature processing methods, and noise in biological data likewise poses significant challenges. Clinical applications demand robust feature processing methods. In breast cancer histopathology, Vision Transformers (ViTs) have outperformed traditional texture-based features (e.g., Haralick descriptors), achieving an AUC of 0.92 versus 0.85 on the BreakHis dataset [31], which is attributed to their ability to capture global contextual patterns. Federated learning frameworks enable multi-institutional collaboration without compromising patient privacy. For example, a federated feature extraction model improved malignancy detection F1-scores by 18% compared to single-center models [32]. Interpretability tools like Grad-CAM [33] bridge the gap between AI decisions and clinical trust. In lung nodule detection, the regions such tools highlight were reported to align with radiologists' diagnostic criteria (validated in a 2023 Nature Medicine study [34]). These advances emphasize the need for feature engineering that harmonizes technical innovation with clinical requirements.
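The aggregation step that lets institutions collaborate without exchanging patient data can be sketched in a few lines. The example assumes the common federated averaging scheme, in which each site trains locally and only model parameters are combined, weighted by local sample count; the article does not state which aggregation rule the cited work uses, and the parameter vectors and site sizes below are invented for illustration.

```python
def federated_average(client_weights, client_sizes):
    """Combine per-site parameter vectors into one global vector.

    Each site's parameters are weighted by its number of local samples,
    so raw records never leave the site; only parameters are shared.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(weights[k] * size for weights, size in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]

# Hypothetical parameters of the same feature extractor trained at two hospitals.
site_a = [1.0, 2.0]  # trained on 100 local samples
site_b = [3.0, 4.0]  # trained on 300 local samples
global_weights = federated_average([site_a, site_b], [100, 300])
```

In a full system this averaging would be repeated over many communication rounds, with the global parameters sent back to every site as the starting point for the next round of local training.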

Addressing the challenges of robustness and real-time performance in industrial scenarios

Engineering applications require feature processing methods that are robust under real-world conditions. In semiconductor wafer inspection, self-supervised learning frameworks like SimCLR [35] extract discriminative features, reducing false defect rates from 8% to 3% on the WM-811K dataset. For real-time systems, lightweight architectures such as MobileNetV3 [36] optimize feature extraction on edge devices. Deployed on NVIDIA Jetson Xavier, MobileNetV3 reduced inference latency from 50 ms to 20 ms while maintaining 98% accuracy in automotive object detection. Multimodal fusion also plays a pivotal role in predictive maintenance: fusing acoustic signals with other sensor data via hybrid models (e.g., wavelet-CNN [37]) achieved 96% fault prediction accuracy (IEEE Transactions on Industrial Informatics, 2023 [38]). These examples highlight the necessity of balancing computational efficiency and robustness to environmental variability in industrial AI systems.

These applications also face challenges, such as how to fuse features from multimodal data and how to deal with data scarcity and class imbalance. Addressing these challenges requires interdisciplinary collaboration.

As a core component of AI, feature processing methods are undergoing rapid development. From conventional methods to deep learning-based feature extraction, new technologies continue to emerge in this field. In the future, feature processing research may pay more attention to small-sample learning, interpretability, and related aspects. With the expansion of AI, developing more universal and efficient feature processing methods will become an important trend. We expect these advances to raise AI to a higher level and to provide strong support for innovative applications.

- Dhal P, Azad C. A comprehensive survey on feature selection in the various fields of machine learning. Appl Intell. 2022;52(4):4543-4581. Available from: https://link.springer.com/article/10.1007/s10489-021-02550-9

- Acosta JN, Falcone GJ, Rajpurkar P, Topol EJ. Multimodal biomedical AI. Nat Med. 2022;28(9):1773-1784. Available from: https://doi.org/10.1038/s41591-022-01981-2

- Alotaibi B, Alotaibi M. A hybrid deep ResNet and inception model for hyperspectral image classification. PFGC J Photogramm Remote Sens Geoinf Sci. 2020;88(6):463-476. Available from: https://link.springer.com/article/10.1007/s41064-020-00124-x

- Peng S, Huang H, Chen W, Zhang L, Fang W. More trainable inception-ResNet for face recognition. Neurocomputing. 2020;411:9-19. Available from: https://doi.org/10.1016/j.neucom.2020.05.022

- Barakbayeva T, Demirci FM. Fully automatic CNN design with inception and ResNet blocks. Neural Comput Appl. 2023;35(2):1569-1580. Available from: http://dx.doi.org/10.1007/s00521-022-07700-9

- Sherstinsky A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D Nonlinear Phenom. 2020;404:132306. Available from: https://doi.org/10.1016/j.physd.2019.132306

- Al-Selwi SM, Hassan MF, Abdulkadir SJ, Muneer A, Sumiea EH, Alqushaibi A, et al. RNN-LSTM: From applications to modeling techniques and beyond—Systematic review. J King Saud Univ Comput Inf Sci. 2024:102068. Available from: https://doi.org/10.1016/j.jksuci.2024.102068

- Shewalkar A, Nyavanandi D, Ludwig SA. Performance evaluation of deep neural networks applied to speech recognition: RNN, LSTM and GRU. J Artif Intell Soft Comput Res. 2019;9:235-245. Available from: http://dx.doi.org/10.2478/jaiscr-2019-0006

- Gao R, Hou X, Qin J, Chen J, Liu L, Zhu F, et al. Zero-VAE-GAN: Generating unseen features for generalized and transductive zero-shot learning. IEEE Trans Image Process. 2020;29:3665-3680. Available from: https://doi.org/10.1109/tip.2020.2964429

- Tian C, Ma Y, Cammon J, Fang F, Zhang Y, Meng M. Dual-encoder VAE-GAN with spatiotemporal features for emotional EEG data augmentation. IEEE Trans Neural Syst Rehabil Eng. 2023;31:2018-2027. Available from: https://doi.org/10.1109/tnsre.2023.3266810

- Ibrahim BI, Nicolae DC, Khan A, Ali SI, Khattak A. VAE-GAN based zero-shot outlier detection. In: Proceedings of the 2020 4th international symposium on computer science and intelligent control. 2020. Available from: https://doi.org/10.1145/3440084.3441180

- Mukesh K, Ippatapu VS, Chereddy S, Anbazhagan E, Oviya IR. A variational autoencoder general adversarial networks (VAE-GAN) based model for ligand designing. In: International Conference on Innovative Computing and Communications: Proceedings of ICICC 2022, Volume 1. Singapore: Springer Nature Singapore; 2022. Available from: https://www.amrita.edu/publication/a-variationalautoencoder-general-adversarial-networks-vae-gan-based-model-for-ligand-designing/

- Elsken T, Metzen JH, Hutter F. Neural architecture search: A survey. J Mach Learn Res. 2019;20(55):1-21. Available from: https://www.jmlr.org/papers/volume20/18-598/18-598.pdf

- Ren P, Xiao Y, Chang X, Huang PY, Li Z, Chen X, et al. A comprehensive survey of neural architecture search: Challenges and solutions. ACM Comput Surv. 2021;54(4):1-34. Available from: https://arxiv.org/abs/2006.02903

- Chitty-Venkata KT, Somani AK. Neural architecture search survey: A hardware perspective. ACM Comput Surv. 2022;55(4):1-36. Available from: http://dx.doi.org/10.1145/3524500

- Li L, Talwalkar A. Random search and reproducibility for neural architecture search. In: Uncertainty in artificial intelligence. PMLR; 2020. p. 367-377. Available from: https://arxiv.org/abs/1902.07638

- Lindauer M, Hutter F. Best practices for scientific research on neural architecture search. J Mach Learn Res. 2020;21(243):1-18. Available from: https://doi.org/10.48550/arXiv.1909.02453

- Mousavi SS, Schukat M, Howley E. Deep reinforcement learning: an overview. In: Proceedings of SAI Intelligent Systems Conference (IntelliSys) 2016: Volume 2. Springer International Publishing; 2018. Available from: https://doi.org/10.48550/arXiv.1806.08894

- Ding Z, Huang Y, Yuan H, Dong H. Introduction to reinforcement learning. Deep reinforcement learning: fundamentals, research and applications. 2020:47-123. Available from: http://dx.doi.org/10.1007/978-981-15-4095-0_2

- Mosavi A, Faghan Y, Ghamisi P, Duan P, Ardabili SF, Salwana E, et al. Comprehensive review of deep reinforcement learning methods and applications in economics. Mathematics. 2020;8(10):1640. Available from: https://doi.org/10.3390/math8101640

- Barto AG. Reinforcement learning: An introduction. SIAM Rev. 2021;6(2):423.

- Gahar RM, Arfaoui O, Hidri MS, Hadj-Alouane NB. A distributed approach for high-dimensionality heterogeneous data reduction. IEEE Access. 2019;7:151006-151022. Available from: https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=8861035

- Yilmaz Y, Aktukmak M, Hero AO. Multimodal data fusion in high-dimensional heterogeneous datasets via generative models. IEEE Trans Signal Process. 2021;69:5175-5188. Available from: https://doi.org/10.48550/arXiv.2108.12445

- Pölsterl S, Conjeti S, Navab N, Katouzian A. Survival analysis for high-dimensional, heterogeneous medical data: Exploring feature extraction as an alternative to feature selection. Artif Intell Med. 2016;72:1-11. Available from: https://doi.org/10.1016/j.artmed.2016.07.004

- Rabiee M, Mirhashemi M, Pangburn MS, Piri S, Delen D. Towards explainable artificial intelligence through expert-augmented supervised feature selection. Decis Support Syst. 2024;181:114214. Available from: https://doi.org/10.1016/j.dss.2024.114214

- Aguilar-Ruiz JS. Class-specific feature selection for classification explainability. ArXiv Preprint. 2024. Available from: https://doi.org/10.48550/arXiv.2411.01204

- Woo S, Park J, Lee JY, Kweon IS. CBAM: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV). 2018. Available from: https://doi.org/10.48550/arXiv.1807.06521

- Vora S, Lang AH, Helou B, Beijbom O. PointPainting: Sequential fusion for 3D object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 2020. p. 4604-4612. Available from: https://doi.org/10.48550/arXiv.1911.10150

- Lin Z, Akin H, Rao R, Hie B, Zhu Z, Lu W, et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science. 2023;379:1123-1130. Available from: https://doi.org/10.1126/science.ade2574

- Rostami M, Oussalah M. A novel explainable COVID-19 diagnosis method by integration of feature selection with random forest. Inform Med Unlocked. 2022;30:100941. Available from: https://doi.org/10.1016/j.imu.2022.100941

- Spanhol FA, Oliveira LS, Petitjean C, Heutte L. A dataset for breast cancer histopathological image classification. IEEE Trans Biomed Eng. 2016;63(7):1455-1462. Available from: https://doi.org/10.1109/tbme.2015.2496264

- Li X. Federated feature learning for mammography diagnosis with privacy preservation. Nat Digit Med. 2022;5:123.

- Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proc IEEE Int Conf Comput Vis (ICCV). 2017;618-626. Available from: https://doi.org/10.48550/arXiv.1610.02391

- Wang H. Interpretable AI for lung nodule malignancy prediction in low-dose CT. Nat Med. 2023;29(6):1430-1438.

- Chen T, Kornblith S, Norouzi M, Hinton G. A simple framework for contrastive learning of visual representations. Proc Int Conf Mach Learn (ICML). 2020;119:1597-1607. Available from: https://proceedings.mlr.press/v119/chen20j.html

- Howard A, Sandler M, Chu G, Chen LC, Chen B, Tan M, et al. Searching for MobileNetV3. Proc IEEE/CVF Int Conf Comput Vis (ICCV). 2019;1314-1324. Available from: https://openaccess.thecvf.com/content_ICCV_2019/html/Howard_Searching_for_MobileNetV3_ICCV_2019_paper.html

- Zhang Y. Wavelet-CNN for mechanical fault diagnosis under noisy environments. Mech Syst Signal Process. 2021;152:107413.

- Gupta A. Multimodal sensor fusion for predictive maintenance in Industry 4.0. IEEE Trans Ind Inform. 2023;19(7):4321-4332.

- Jumper J, Evans R, Pritzel A, Green T, Figurnov M, Ronneberger O, et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021;596:583-589. Available from: https://www.nature.com/articles/s41586-021-03819-2